Currently, as Donald Trump famously said, everything is computer. But also (and eventually I’ll finish that post), everything is gender. And nothing is more computer, and more gender, than artificial intelligence. Plus I’m preparing a class that involves AI and gender (here’s a seminar, in Spanish, that you can sign up for if you’re interested in the general topic of “gender”), so I had all the research done beforehand. And also because the article I was working on last week (about politics) glitched out on my laptop and I want to take a breather there.

Write it, cut it, paste it, save it, load it, check it, quick – rewrite it

The first question, which I think is the most obvious one, is how AI will change the labor market in general. This has been a longstanding topic on this blog (a more general post, one about AI and employment, and one about the cheating debacle last month), and it’s also fundamental to understanding the most important aspect of shifting gender relations: economics (and besides, I don’t run a gender studies blog, anyways).

The first thing to note is the same old, same old about tasks, skills, and jobs. A job is a bundle of tasks. Each task needs a skill to be completed. A new technology can replace a skill, or it can complement it; if it replaces enough skills that all the tasks a job previously had assigned are done by the machine, we consider the job “automated” and it disappears. When people say “70% of jobs are at risk of automation”, what they actually mean is “70% of jobs contain tasks that could plausibly be automated”. This dynamic is known as skill-biased technical change, where the level and amount of skills required in a profession can change because of a new technology. If you look at the specific case of ATMs, what happened is that they replaced “bank tellers” as a job because they could do the tellers’ tasks better (substitutes), but then new jobs developed where the ATMs were complements - in particular, more sales-focused bank clerk jobs. One example of complementarity is that GitHub Copilot (an AI programming assistant) let software developers complete a series of tasks in half the time a control group took. In contrast, ChatGPT managed to reduce demand for freelance labor quite substantially, for example for translation services, since the tasks are easy and cheap to automate.
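To make the gap between “jobs at risk” and “jobs automated” concrete, here’s a minimal sketch of the job-as-bundle-of-tasks framing. The jobs, the tasks, and which ones count as automatable are all made up for illustration; none of it comes from a real dataset.

```python
# Hypothetical toy data: each job is a bundle of tasks, and each task is
# flagged True if a machine could plausibly do it. These flags are invented
# for illustration only.
jobs = {
    "bank teller": {"count cash": True, "process deposits": True, "sell products": False},
    "translator": {"translate text": True, "proofread": True},
    "nurse": {"lift patients": False, "chart vitals": True, "comfort patients": False},
    "plumber": {"diagnose leak": False, "fit pipes": False},
}


def share_exposed(jobs):
    """Share of jobs with at least one automatable task (the headline number)."""
    return sum(any(tasks.values()) for tasks in jobs.values()) / len(jobs)


def share_fully_automated(jobs):
    """Share of jobs where every task is automatable (the job disappears)."""
    return sum(all(tasks.values()) for tasks in jobs.values()) / len(jobs)


exposed = share_exposed(jobs)
automated = share_fully_automated(jobs)
print(f"at risk: {exposed:.0%}, fully automated: {automated:.0%}")
```

In this toy economy three out of four jobs are “at risk”, but only one of them (the translator) actually has every task covered by the machine. The other two are the ATM case: some tasks go away, and the job is rebuilt around what’s left.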

Skill-biased technical change can also affect the amount of skills: there’s a study of Kenyan entrepreneurs that finds that receiving advice from AI worsened performance gaps between high and low performers, but only because the high performers were better at following the correct advice from AI. But the findings are generally the other way: studies typically find that productivity doesn’t increase that much from AI, and that the gains are mostly driven by automating away “tedious” tasks like editing and drafting, emails, document creation, or retrieving information from data sources. In large part, these gains come not from letting top employees pull away from everyone else, but rather from letting bottom performers catch up, especially in tasks they have less experience in. Obviously AI has changed a lot over time, and has become much more widespread: for example, between 2022 and December 2024, the share of people who use AI rose from 0% to around 14%. And between January and April 2025, that share rose from 14% to 43%, and is probably already a majority - in contrast, this process took 6 years for social media, 12 years for the internet, and 40 years for electricity.

In addition to the “micro” effects on jobs, skill-biased technical change can have pretty major “macro” effects on the structure of the economy: starting in the 1970s, advanced economies began placing an increasing premium on “human capital” (education) over other forms of skills. This led to a phenomenon called “job polarization”, which came from the “middle” of the job distribution (basically, good jobs that didn’t need degrees) being hollowed out by technological changes (automation and computerization), offshoring and trade, and the fact that the “knowledge sector” itself boomed. Something really important to note is that this was worsened by macroeconomic issues: between 2007 and 2016, the US labor market created 8.4 million jobs for college graduates, but lost 5.5 million for non-graduates, partly due to changing educational requirements but also due to persistently lower demand.

I’ve also written about AI specifically and how it plays out before, and I’m kind of skeptical that AI will be “the end of work” or something - a lot of it depends on the specific complementarities of AI and various skills and jobs, organizational concerns about the structure of corporations, and bigger-picture macro factors that I’ll get to in a bit. If you look at some recent labor market trends, what you find is that labor polarization changed up a bit, particularly at the low end of the labor market (such as retail), and importantly, that the entry-level job market is much worse: a lot of low-level occupations in coding, law, and more are getting crowded out by a mix of general economic pessimism and AI.

Alexa versus Hal 3000

So, what is AI going to do to our genders? We have to start with how the changes in the economic structure of developed nations broke down by gender, and then move on from there.

These changes in economic structure and labor market outcomes also resulted in, you guessed it, very uneven effects by gender1. The big one is that automation, deindustrialization, and a rising skill premium in the services sector had men as its big losers and women as its big winners (I mean, I wrote about this on Vox!). This has a number of very complicated causes: the decline of manufacturing (and construction - there’s always a housing angle) affected men the most, while the skills in demand in the manufacturing sector itself also changed to require more education. Cuts to social services also disproportionately affected men, since they were big recipients of unemployment insurance, for example, and would commit crimes otherwise. Men are also at a disadvantage in the “care economy”, mostly because they just don’t want to work “pink collar” jobs because of cultural norms. Additionally, men are doing worse than women in education (for a lot of really complicated reasons), which puts them at a disadvantage in the knowledge sector - especially because “social” skills have a big premium there, and women just be yapping. Additionally, the decline of sexism in the workplace means that men lose out on their (unearned) gender benefits, while the reluctance of boomer bosses to retire prevents younger men from getting top jobs.

So, at the micro level, we can safely say that there won’t be much of a shift in the manual sector from AI - for example, Japanese nursing homes attempted to automate care jobs and it was a disaster. The effects are going to come from the knowledge sector, which is both the wealthiest and the most “female” one2. If you look at the list of effects above, nothing jumps out and screams GENDER. So what are these effects going to be?

The one big effect that I do think people don’t really discuss that much is AI-driven discrimination. A while back I read a book titled Weapons of Math Destruction, and the general premise was, basically, that algorithms can be trained on biased or discriminatory data, and so they can “neutrally” produce the same results as manual discrimination. My friend Soumitra Shukla has a paper about this: basically, if you look at hiring processes in firms, they tend to discriminate based on culture at the end of the hiring process, by inferring background (in this case, caste) from personal interviews. Now imagine that the people of the caste you want to discriminate against all have last names that end in “E”; if you handed over the list of everyone who had an interview and how well they did to a custom-built AI, what would happen is that it would judge everyone neutrally until the personal interview, and then would drop everyone whose last name ends with E because the company just doesn’t hire “people of E”. Algorithmic discrimination, thus, became a huge issue back in like 2017/2018, with questions about transparency and accountability, how it would avoid repeating previous errors from the data set, and what procedures could be implemented to prevent it. Studies found it applied to gender and to race, at least. This extends to AI, which is much more powerful than the rinky-dink algorithms we were talking about here, particularly given the importance of cultural norms and the history of straight-up discrimination in the workplace for women’s labor force experience.
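The “people of E” scenario above can be sketched in a few lines. This is a toy illustration with invented names and scores, and the “model” is just a lookup of historical hire rates, not how any real hiring system works. The point is only that the model is never told about caste and still reproduces the pattern.

```python
from collections import defaultdict

# Hypothetical hiring history: (last name, interview score out of 10, hired?).
# The names and scores are invented; the only pattern built in is that nobody
# whose last name ends in "e" was ever hired, regardless of score.
history = [
    ("Moore", 9, False), ("Lane", 8, False), ("Price", 9, False),
    ("Cole", 4, False), ("Smith", 8, True), ("Khan", 9, True),
    ("Lopez", 7, True), ("Brown", 3, False), ("Singh", 4, False),
]


def features(name, score):
    # The model never sees "caste", only a neutral-looking spelling feature
    # and whether the interview score clears an (assumed) bar of 7.
    return (name.lower().endswith("e"), score >= 7)


def train(history):
    """Learn the historical hire rate for each feature combination."""
    counts = defaultdict(lambda: [0, 0])  # features -> [hired, total]
    for name, score, hired in history:
        key = features(name, score)
        counts[key][0] += int(hired)
        counts[key][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}


def predict(model, name, score):
    """Recommend hiring if similar past candidates were mostly hired."""
    return model.get(features(name, score), 0.0) >= 0.5


model = train(history)
print(predict(model, "Garcia", 9))  # strong candidate, name does not end in "e"
print(predict(model, "Greene", 9))  # identical score, name ends in "e"
```

Two candidates with the same interview score get opposite recommendations, and nothing in the code mentions the group being discriminated against. That’s what makes this kind of thing hard to audit: the bias lives in the training data, not in any line you could point to.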

Let’s assume that women still get hired and look at how they’d do under AI. Obviously women and men have around the same level of skill at office jobs, and a lot of the differences in participation in the knowledge economy are driven by culture and preferences (more on how they could change later), so it’s not like ex ante it would have any gendered effects. One exception is that, since motherhood accounts for a lot of the gender wage gap because women work less both in hours and for fewer years, AI could boost women’s labor market prospects by letting them catch up to more experienced men. So we are just like, spitballing here, but if AI on its own doesn’t necessarily have any especially gendered effects on performance, the question is whether men and women are using it differently.

And yes, they are: women just use AI at way lower rates than men. There’s, roughly, a gap between 10 and 40 points in AI adoption, and at the same time, literally no AI website or app has even close to a majority of women users. This varies a lot by country - in Indonesia, it’s a 50/50 split, and it’s close to that in Singapore, Denmark, Argentina, Chile, and Canada. Meanwhile, in the US it’s around 70-30, and it’s closer to 3:1 in India, Egypt, and Pakistan. Women miss out on AI across cultural contexts and industries, and the one exception is American tech employees, where senior women use AI so much that they offset junior women using it way less. Studies from Denmark also found that women are not only less likely to use ChatGPT at all, they also use it much less in terms of time and for fewer tasks. Female students are also 25% less likely to report using AI, and are also more likely to question using it ethically and practically. This is especially serious when AI is banned, because men are just much more likely to keep using it than women (46% of women do, versus 66% of men).

In general, women tend to report that they are more likely to know how to use AI and its relative benefits, they trust AI more when they use it, and think they’re more successful and persistent when they use AI - they’re just much less likely to use AI, much less aware of the risks, and need more training and reassurance on AI. Both men and women are worried about the risks of mistakes, have difficulties using AI at times, have similar concerns about the reliability of AI, and are equally concerned about losing their jobs, becoming dependent on AI, and being misled by it. The really important thing is that knowing how to use AI is a big deal for employers, so women do really need to get up to date on it, lest it widen existing differences.

The important question then is actually why women just use AI much less than men. The actual studies on the topic cite the good ole’ fashioned explanations: lower self-confidence among women, less awareness of how to apply AI, and higher risk-aversion. In particular, I have to note that women are just less likely to use any new technologies and seem to wait until it’s socially acceptable first: men tend to be influenced to use a technology if it seems useful, while women balance ease of use with perceptions of social norms. A lot of this stuff is just as old as time: women tend to be penalized more heavily for mistakes at work, which creates a lot of apprehension towards “high agency” behavior and a lot of risk aversion, for example during salary negotiations or against taking leadership roles.

So, overall, the question of gender and AI is pretty interesting: women could face algorithmic employment discrimination from AI-driven hiring (and firing) decisions, but AI could also help reverse existing inequalities between men and women if and only if women take up AI - which they are not doing because of a lack of appropriate training and information, and also really stupid social norms and dynamics. So AI might actually make micro-level gender inequality worse.

Don't act like I never told ya

This was all micro-level stuff, and that’s fine. But what about the macro level? Because you could hem and haw about computers and gender, but you’d miss a lot of the picture if you didn’t include that computers also made the knowledge economy a lot bigger than the manual economy.

Well, a lot of this is just answering micro-level questions like “what is going to be the impact of AI on productivity” (see above), but I’ve staked my case that I don’t think we’re going to enter an era of infinite LLM-driven growth. When Paul Krugman and Robert Solow were dunking on the effect of computers in productivity, it was in a context where people were saying that the internet would permanently increase productivity growth to 4% - instead, it increased it to 2% for the better part of 20 years. The big question here is what happens to productivity, and there’s two big perspectives that literally made a bet on it: the first, from Robert Gordon, is that technologies have a pretty defined lifespan where they increase productivity for a while as they’re adopted, and then taper off once they’re exhausted. These gains are, I think, pretty well established: adopting new technologies is crucial for productivity growth, and this also usually involves big changes to economic structure. Particularly relevant is the idea that scientific progress, and thus productivity growth, are slowing down substantially (yeah, that’s a link to a post about Oppenheimer), as well as concerns over ageing, demographics, and educational attainment3. The other perspective, which is I think a bit more optimistic, comes from Erik Brynjolfsson (the guy who made the bet with Gordon), and it’s the idea that there’s these big technologies that change how the economy is structured on its basic level (called General Purpose Technologies or, quite funnily, GPTs), which do exhibit the behavior Gordon describes (called the productivity J curve), but which in many cases is also heavily reliant on intangible assets that show up on macro statistics on a lag - because they increase productivity in two “rounds”, once when they’re created and later on as they mature and get more embedded into the economy. 
This “faction” has cited AI as a “GPT”, and has been on this since ~~Prince was on Apollonia / since O.J. had Isotoners~~ 2018, when it was just machine learning stuff. You could, however, make an argument about the future role of AI in the scientific discovery process and how it relates to the slowdown in scientific progress, a conversation that has the problem of the most prominent paper on the topic being quite literally AI-driven fraud.
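To put the 4% versus 2% productivity growth figures mentioned above in perspective, here’s a quick compounding sketch. The only inputs are the two growth rates and the 20-year horizon from the text; the starting level of 1.0 is an arbitrary normalization I’m assuming for illustration.

```python
def level_after(growth_rate, years, start=1.0):
    """Productivity level after compounding a constant annual growth rate."""
    return start * (1 + growth_rate) ** years


hyped = level_after(0.04, 20)     # what the optimists were promising
realized = level_after(0.02, 20)  # roughly what actually happened

print(f"4% for 20 years: {hyped:.2f}x the starting level")
print(f"2% for 20 years: {realized:.2f}x the starting level")
print(f"gap: the hyped economy is {hyped / realized:.2f}x as productive")
```

Two percentage points sounds like a rounding error, but over 20 years it’s the difference between productivity more than doubling and it growing by about half. That’s why the Gordon versus Brynjolfsson bet is a big deal and not just nerds arguing.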

The second category of effects discussed are on inequality. The first source of inequality is in the functional distribution of income: between labor and capital. If AI displaces labor, then capital’s share of income rises rapidly, and you end up with a Victorian Era-tier landscape of extreme economic inequality, where the economy reverses its polarization by turning everyone into a badly paid serf of the ultra-rich. You’d have people doing the job of court jester, or Roman-style tutors, or whatever, and it would mean higher aggregate demand but lower effective demand since most people would make too little to afford anything. A second type of concentration, which is more frequently talked about, is within labor, that is, whether AI would increase income inequality by displacing people from a lot of cognitive employment (Brynjolfsson’s book on the topic inspired this viral YouTube video all the way back in 2014), and by worsening skill gaps and boosting economic concentration.

The effects on net, and on gender, here are… weird, and highly dependent on demand. If inequality increases a lot because the knowledge economy is gutted, then the “winning” sectors will be all personal services, where women reign supreme - except that, since they also pay way less, the gender wage gap would get worse, not better. But if the knowledge economy booms, then women would gain on net from both channels, but the gender wage gap would get really weird. The policy response is also important: if governments redistribute gains between winners and losers, then you could end up with both an increase in productivity and an increase in demand that channels into things like the creator economy4. And since, as I’ve said before, the distribution of income between the knowledge and manual services and within each of those industries determines our political dynamics, especially around gender, we could see a lot of really weird shit, like institutional trust completely vanishing, or the gender political divide exploding.

But a second order macro effect people tend to sort of miss is that, if the functional distribution of income becomes drastically more unequal, the feasibility of democracy would be under question. While it’s not necessarily true that politicians listen to the rich more than voters, it’s also true that distribution of income does affect institutional outcomes: the impact of slavery and indentured labor on Latin American economic performance is in large part mediated by the power that these practices gave to the mining and plantation elites. And obviously, institutional quality is actually related to outcomes and to the viability of democracy. If you consider LLMs as an extractive industry and not as part of the knowledge sector (I’m making a Friedmanite case here), you’d basically get to the idea of taxing their owners to fund a UBI, Alaska style. And like, I don’t think that “AI could end democracy” is that far off - it is, in fact, the explicit argument that Curtis Yarvin and his merry band of “Dark Enlightenment” dipshits5 are making. The “tech right” pretty explicitly wants US democracy to be replaced by an oligarchy that is managed by big money and billionaire interests.

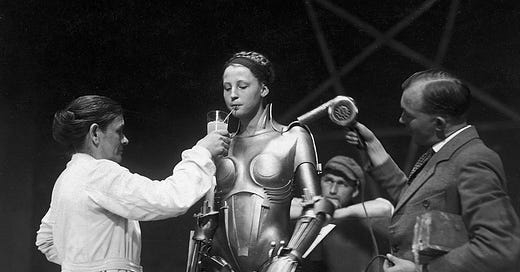

The final stop of this already too long post has to be culture. Donna Haraway, a feminist philosopher, wrote in the 1990s that technology was breaking down three crucial distinctions in society: between human and animal (because of evolution, DNA, etc); between human and machine (pacemakers, glasses, augmented reality), and between the physical world and the non-physical world (think, the internet). These distinctions, and others such as gender, race, and class, would be increasingly under strain as technology and the economy changed, and would have to be abandoned for more particularly-inclined and cross-coalitional ones (Hayekian Harawayism? Yglesias-Haraway Thought?). Her solution is socialist feminism or something. Haraway is… not a very clear writer. But the thing is that, economically, this all checks out: technology has changed our genders. This isn’t even about transgender people. If you read between the lines of the history of gender norm changes, what you see is that technologies ranging from “fences for cattle” to the plough to the birth control pill, vacuum cleaners, and the computer changed our economies profoundly, and those economic changes had ramifications for women’s outcomes in the workforce. Another, much less complicated example is the rise of the “creative class” (previously called by the way less cool name of “the BoBos”, or the Bohemian Bourgeoisie): the same changes in the economic structure that I’ve been going on and on about have economically empowered a new social class that has distinct aesthetic and cultural preferences for shit like lattes, museums, and international cuisine. These people, and their taste for gay ass shit like bike lanes and enjoyable nightlife, have drastically reshaped our cities - one of the best examples is Berlin. 
So if something as stupid as “computer scientists have to live in a handful of superstar cities and they demand to not drive to work” can completely reshape our cities, I think that it can also reshape our understanding of human gender norms when it’s literally machines who are as intelligent as humans and capable of holding conversation.

Conclusion

So, AI is going to change the labor market. If or when it does, it’s going to shift economic power away from people who currently have it, and towards a different group of people - and it’s going to change not just our economies, but our democracies, our cities, and of course, our gender norms. So we have to like, be aware of it and try to balance the powers of the market with the fact that building an economy that’s just a giant plantation for techlords and their drone armies would be, well, bad for 99.999999999% of the human population.

I’m going to be talking about men and women because, let’s face it, if you’re non-binary I don’t think that the China Shock was going to be your biggest concern.

I have to point something out that gets kind of lost in discourse which is that the gender wage gap still exists because 1) feminized service jobs are very underpaid, 2) women tend to choose lower paid fields because they get more life satisfaction from them, and 3) in the cognitive sector, women take a pretty big beating versus men when they have kids (and birth rates for educated women are basically stable. Go yell at waitresses instead). Women’s improvement has been relative since the 1970s, but men are still ahead in absolute terms, they have just not improved their situation much and low-skill men are, on net, worse off.

Where women, on account of being hotter than men, would end up on top as well.

Elon Musk is the Joker of the Tech Right, a stupid clown acting impulsively, while Peter Thiel is more like Lex Luthor (though he is also on crack, to be fair). His plan is basically to build a surveillance state where drones murder you if you say something feminist, I gather.

Great article; though I am somewhat confused about this line:

> and it would mean higher aggregate demand but lower effective demand since most people would make too little to afford anything.

I don't really understand the distinction between aggregate and effective here and the only hits I found searching it up were in relation to marxian stuff.

Thank you for the article, Miss Mindel !

The cornucopia attitude many men have when they can trade effort for risk explains some of the gender gap in AI use. Possibly the Stan and Wendy Dynamic too - delegating tasks leaves one for more supervisory maneuvers. Still, that's screwing with my head - why is it so stark and so weirdly so ?

Most of it is still at the policy level. Hopefully, the fear shocks and the current effect of Twitter becoming a political-epistemic supply chain will take shape enough to buoy anything the AI may/not do. The political marriage of the charismatic and the wonk might make the attention economy a net good - might.

The Republicans of the world will scaremonger once someone asks ChatGPT about switching genders. Going to also be the next Duolingo in terms of force-secularizing good, clean, Christian Muslima.

--------------

Excuse the rest of the juvenilia:

Technology renegotiates with Nature what reality ought to be. But what does an Oracle in the Machine to-go actually accomplish socially other than deliberate mass lobotomy (of mostly men, prolly - anecdote: my friend had a year of using ChatGPT for all his assignments last I checked on him. He's a biz major, so I'm not surprised he's not getting caught.)

Not gonna talk on behalf of womenfolk. So weird.

Belief statements are strange things and I wonder what it'll change or spread. People already commit ad meme-ico and ad google-rico. Ad LLM-ulum, I think, will be worse because it inherently wants to be helpful. It requires framing devices - my favorite is charging my writing as from a third party I dis/agree with on the merits. Pretty shit on most things tho.

But what new onus does it place on the individual ? Social media has made people ask the question of consent/self-invitation so much it's a significant reason that the person that could butt dial the nuclear codes is a quintessential Gen X, Reality TV addled, Clip farming, person that's all about self-invitation and expression of his agency over the concept of permission. Still boggles my mind they wanted to say the word so much they elected one and felt it a virtue to be. Just so retarded.

I'm struggling because I think it largely removes responsibility. Perhaps we'll just have an Oracle of De-fi - ooo, would this be another thing that could reflexively shift the aggregate perception of the mirror ? In terms of elections ? Could be a good thing - society demands it be "maximally truth-seeking" but it since it doesn't practice conspicuous affiliation... nah, there's probably a thousand ways to kill society.

Notwithstanding their place in hell, bicycles were and are feminist because they expanded their capacity. Not sure what positive personal capacity increases AI would give any of the 3 genders other than filling their minds with more dollies and false people.

Currently, the internet already fucks with anyone's bad day. You crash out and find a living abridged Mein Kampf or rediscover that Africa actually starts in the Balkans. A friend would understand they have to awkwardly go "there there", but chatbots will assume they're being helpful by helping you put yourself in a cult.

It will open new channels of activity even without productivity, especially for historical analysis and possibly ancient language decoding. We have a set amount of phonemes possible, so hopefully AI can do for languages what AlphaFold did for proteins. It's already being used to (poorly) do what Assassin's Creed does but for religious figures.

A safe bet is the continued value of networks. Matching is a difficult set of tasks that I hope can be automated. There are apparently simple policy measures that could help with it (soft caps on job applications) but that's apparently too hard to implement because it hasn't already been done and thus will likely stay in the papers. And it was bad before AI job trawlers suffocated the dry river.

That "E" is scary. Heard of personality tests being used for hiring. Astrology aside - what if the AI HR believes you're histrionic and avoidant and then skips you because that's quite an unstable combination. Would be morbidly interesting to see the price tag to trans your Big 5 to something more compliant for accounting.

At the start of this charade, I wanted rapidity because we'd be closer to Star Trek but apparently, it has Roman Salutes. Nice that it's difficult to control for now.

Ironically while new technologies allow a separate world outside of the personal, they are also shredding clock-in / clock-out. Lorde, are those sleep health biometrics resold ? Would employers benefit from you having the best sleep possible ? Not that dystopian, the accrued trauma from poor sleep probably shaves off a few good years of your life. I would end it.

Another thing to note about motherhood breaks is that fatherhood breaks result in similar career stumbles. I've been thinking about this and boy struggles might be because the economies of today do not tolerate fuck ups and mistakes, "sabbaticals", and just not being interested beyond praxis.

AI might worsen it on the latter front because as you cited the more experienced (the more knowledge you apply) the better the results. It could make it better as I've found it helpful deconstructing econ nonsense. The Ivory Tower is a function of personality - but this is again quite micro. Educational policies will likely adjust and demand more investment - unless the knowledge economy blah blah.

That Joe Schmoes will continue to be useless... Though, some people are using AI in good ways. Some...

Maybe vibe-coding Anime would be enough intellectual stimulation and artistic communication for the mind and soul. God, that would be so cool. The solution to extreme flattening of your world is extreme atomization of your World. My parents get to the same level of zonked with Tik Toks and AI videos as I did with Ben 10. Maybe this will make all the care economy services stuff more productive as you could pacify the serviced with custom vibe-auteured sedatives.

----

bobos